From Tennis to Pickleball: A Starter Guide

With pickleball becoming better known in Germany, I have lately seen more and more tennis players taking up the sport. This article is meant as a starter guide for you.

The good news first: with a tennis background, you typically have very good prerequisites for quick success in pickleball. And because pickleball is played on a smaller court, with a slower ball, and mostly as doubles, it is also an ideal alternative if tennis no longer works so well for you for physical reasons.

Paddle

I recommend a paddle with the so-called "elongated" shape (slightly longer and slightly narrower than the standard shape) and a carbon surface. In handling and feel, these paddles are closer to the tennis rackets you are used to than standard-shape paddles. The carbon surface also lets you put at least some spin on your shots, though nowhere near as much as you are used to from tennis. A flagship of this paddle type is the Joola Perseus, but there are of course less expensive models with similar properties.

Grip

I recommend the continental grip as a universal grip. In pickleball you are usually up at the net quickly, and there is no time left to change grips. That makes this grip, which lets you play forehand and backhand volleys in quick alternation, a good choice.

Serve

In pickleball the serve is much more harmless and easier to learn than in tennis. In addition, the serving side is at a tactical disadvantage against the returners. That means a certain (mental) adjustment for you. I have published an article on the serve here. In terms of technique, though, it should not be a hurdle for you.

No Serve & Volley in Pickleball

Stefan Edberg would not like pickleball! The rules make serve and volley impossible in our sport, because the return of serve must bounce before the serving side may hit it. This means that after serving you first have to stay back and wait for the return, which typically comes deep.

Don't Step into the Kitchen When Volleying!

A special feature of pickleball is the non-volley zone (NVZ), which for a reason unknown to me is commonly called the "kitchen". It extends 2.13 meters from the net on each side:

If you are standing in the NVZ, you may not volley. At first this feels like a highly annoying restriction. It is so natural to run up to the net and smash the ball there! I can't even say how often this happened to me as a beginner. After a few days you get used to it. Later you will come to appreciate the kitchen, because when you are under pressure, a smart ball into the NVZ always gives you a good chance of getting back into the rally. You could say the NVZ was invented so that rallies can last as long as possible!

Volleys

Reaction speed and ball feel transfer well from tennis to pickleball. The typical slice volley from tennis also works in pickleball at first. Over time, however, you should get used to the so-called roll volley instead. This shot is played with topspin and a slight use of the wrist, which takes some getting used to for tennis players but comes especially easily to table tennis players. The advantage of the roll volley over the slice volley: it can also be played offensively on balls that have dropped slightly below net height. And thanks to the topspin, a hard roll volley is also more likely to land in the court.

Drives

Good technique on forehand and backhand drives carries over easily from tennis to pickleball. You just cannot put as much spin on the ball as in tennis, so get used to hitting the ball a bit flatter. And don't be surprised if the ball comes right back despite your very good drives. Rallies in pickleball are on average longer than in tennis, because the game was designed that way.

No Baseline Duels

Björn Borg would probably also take little pleasure in pickleball (at first), because you cannot play it from the baseline. Here is a video that explains the basic strategy in pickleball. Typically, within the first five shots all four players are up at the NVZ playing dinks. By the way, Ivan Lendl now plays pickleball with enthusiasm!

Dinks?

A dink is a short ball into the NVZ or just behind its line that, ideally, cannot be attacked. This aspect of the game may initially be somewhat harder for you than other shots, because this kind of shot hardly occurs in tennis. When all four players are at the NVZ, the aim is to place the ball tactically and wait for the opponents to hit a ball that sits up too high. Patience and consistency are the trump cards here; often you also win these rallies through the opponents' unforced errors (such as a ball into the net).

Conclusion: Do It!

So if you are a tennis player thinking about taking up pickleball, all I can say is: definitely do it! You have ideal prior knowledge for this sport and will quickly have lots of fun and success. Just like Steffi Graf, Andre Agassi, and John McEnroe 😉

Pickleball Drill: Big Suspenders (Großer Hosenträger)

This drill works well as a warm-up for somewhat advanced players, because you already need to be able to keep the ball in play fairly accurately and reliably:

Four players stand at mid-court. Player A starts with a cross-court ball to D. D plays down the line to B. B plays cross-court to C. C plays down the line to A. And then it starts over.

So A and B always play cross-court, and C and D always play down the line. Motivation and fun increase if each player counts out loud when hitting: A: "1!", D: "2!", B: "3!", C: "4!", A: "5!" and so on.

The goal is to reach 12. If an error happens before that, the count starts over. Once 12 is reached, you can switch the shot directions: now A and B always play down the line, and C and D always play cross-court.

The drill is over once 12 has been reached twice.
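
To make the hitting order easier to follow, here is a minimal Python sketch of the first round of the drill as described above. The names `PATTERN` and `drill_calls` are made up for this illustration; the sketch only lists who hits to whom and which number is called, it does not model errors or restarts.

```python
from itertools import cycle

# First round of the drill: A and B hit cross-court, C and D hit down the line.
# Each entry is (hitter, receiver, direction).
PATTERN = [
    ("A", "D", "cross-court"),
    ("D", "B", "down the line"),
    ("B", "C", "cross-court"),
    ("C", "A", "down the line"),
]


def drill_calls(target=12):
    """Return (count, hitter, receiver, direction) for every shot up to target."""
    calls = []
    for count, (hitter, receiver, direction) in zip(range(1, target + 1), cycle(PATTERN)):
        calls.append((count, hitter, receiver, direction))
    return calls


for count, hitter, receiver, direction in drill_calls():
    print(f'{hitter}: "{count}!" ({direction} to {receiver})')
```

For the second round you would swap the two direction labels, as described above.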

If there are only two of you, you can play small suspenders. One partner always hits straight ahead, and the other alternates between the partner's forehand and backhand. This variant can get somewhat strenuous, though, if you play it from mid-court or even from the baseline 😉

The Serve in Pickleball

The best part first: the serve in pickleball is easy to learn and to execute. This is unlike tennis or table tennis, where you typically spend a lot of time practicing the serve in order to be competitive. The rules also make it considerably harder for the serve to become a match-deciding "weapon". Aces or a decisive advantage after the serve are therefore fairly rare.

Rules

The positioning rules apply to both the volley serve and the drop serve:

At the moment the ball meets the paddle on the serve, the server's feet must be behind the baseline and within the extended lines of the respective half of the court:

The player's upper body and the ball may be inside the court at that moment. The player may also step into the court immediately after the ball has left the paddle.

But not before:

Even touching the baseline with the tip of the foot during the serve is a fault.

You also may not stand arbitrarily far out to the side:

Here the left foot is outside the extended lines of the left half of the court, which is why this serve would not be legal.
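
The positioning rules above can be written down as a small check. This is only a sketch under my own assumptions: each foot is reduced to a single point, `x` runs across the court from the server's left sideline (0) to the right sideline, `y` is the distance behind the baseline (0 means touching the line), and standing exactly on an extended line still counts as inside. The court width of 6.10 m is the standard size and is not stated in the text; the function name is made up.

```python
COURT_WIDTH_M = 6.10  # standard court width (20 ft); assumption, not from the text


def feet_position_legal(feet, side):
    """Check the foot positions at the moment of contact.

    feet: list of (x, y) points, one per foot, in meters.
    side: "left" or "right", the half the server is serving from.
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    half = COURT_WIDTH_M / 2
    x_min, x_max = (0.0, half) if side == "left" else (half, COURT_WIDTH_M)
    # y > 0: strictly behind the baseline, because touching the line is a fault.
    return all(y > 0 and x_min <= x <= x_max for x, y in feet)


print(feet_position_legal([(1.0, 0.3), (1.5, 0.2)], "left"))   # both feet fine
print(feet_position_legal([(1.0, 0.0), (1.5, 0.2)], "left"))   # toe on the baseline
print(feet_position_legal([(-0.2, 0.3), (0.5, 0.3)], "left"))  # left foot too far out
```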

Who serves when and where, and what about the NVZ?

The serve must land in the diagonally opposite service court, and the ball must not land in the NVZ. The lines count as part of the NVZ: a ball on the back line of the NVZ is a fault. Likewise, the lines count as part of the service court: a ball on the baseline or on the sideline is therefore not a fault. If the serving team wins the point, the server switches sides with their partner. If the first server loses the rally, the partner continues serving from the side where they are currently standing. If the second server also loses the rally, the serve passes to the other team. I have covered scoring and the players' respective positioning in this article.
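
The line rules for where the serve may land can be sketched as a simple check. The assumptions here are mine: the ball is treated as a point, lines have no width, `x` runs across the receiving side from 0 to 6.10 m, `y` is the distance from the net, and `target_half` names the diagonally opposite service court. The court measurements other than the 2.13 m NVZ depth are the standard dimensions and do not come from the text; the function name is made up.

```python
COURT_WIDTH_M = 6.10   # standard width (20 ft); assumption, not from the text
BASELINE_M = 6.705     # net to baseline (22 ft); assumption, not from the text
NVZ_DEPTH_M = 2.13     # depth of the non-volley zone, as given above


def serve_lands_in(x, y, target_half):
    """Return True if a serve landing at (x, y) is good.

    target_half: "left" or "right", the diagonally opposite service court.
    """
    if target_half not in ("left", "right"):
        raise ValueError("target_half must be 'left' or 'right'")
    half = COURT_WIDTH_M / 2
    x_min, x_max = (0.0, half) if target_half == "left" else (half, COURT_WIDTH_M)
    # The NVZ line belongs to the NVZ, so y must be strictly greater than its depth.
    # Baseline and sideline belong to the service court, so those bounds are inclusive.
    return NVZ_DEPTH_M < y <= BASELINE_M and x_min <= x <= x_max


print(serve_lands_in(1.0, 2.13, "left"))   # on the NVZ line: fault
print(serve_lands_in(1.0, 6.705, "left"))  # on the baseline: good
print(serve_lands_in(4.0, 4.0, "left"))    # wrong service court: fault
```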

Volley Serve

This is currently the most popular serve and was originally the only serve allowed. The ball is struck straight out of the hand, without bouncing first.

The paddle head must move from low to high, as shown in 4-3.

At the moment of contact with the ball, the paddle head must not be above the wrist (4-1 shows the correct execution; 4-2 is a frequently seen fault).

In addition, the ball must be struck below the server's waist, as shown in 4-3.

The image above is taken from the USAP Official Rulebook.
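
The three volley serve conditions can be summarized as a small checklist in code. This is an illustrative sketch, not an official rule implementation: the class `VolleyServe`, its fields, and the function `volley_serve_faults` are invented for this example, heights are measured from the ground in meters, and a contact exactly at waist height is treated as a fault (my assumption).

```python
from dataclasses import dataclass


@dataclass
class VolleyServe:
    """Hypothetical snapshot of a volley serve at the moment of contact."""
    paddle_moving_upward: bool
    paddle_head_height_m: float
    wrist_height_m: float
    contact_height_m: float
    waist_height_m: float


def volley_serve_faults(serve):
    """Return a list of violated conditions; an empty list means a legal motion."""
    faults = []
    if not serve.paddle_moving_upward:
        faults.append("paddle head not moving from low to high")
    if serve.paddle_head_height_m > serve.wrist_height_m:
        faults.append("paddle head above the wrist")
    if serve.contact_height_m >= serve.waist_height_m:
        faults.append("contact not below the waist")
    return faults


legal = VolleyServe(True, 0.55, 0.70, 0.60, 1.00)
illegal = VolleyServe(True, 0.95, 0.90, 1.05, 1.00)
print(volley_serve_faults(legal))
print(volley_serve_faults(illegal))
```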

All the rules for the volley serve are essentially meant to ensure that this serve does not become a match-deciding advantage. In the following clip we see a serve by Anna Leigh Waters, currently the best female player in the world:

This type of serve is the one most often seen among the pros: volley serve, topspin, hit deep into the court. But we also see that her opponent returns the serve without much trouble. Aces or points won directly off the serve are fairly rare in pickleball, quite unlike tennis and table tennis.

Drop Serve

This type of serve has only been allowed since 2021. Apart from the foot-positioning rules described above, the drop serve has only one further rule: the ball must be dropped from the hand. Tossing it upward or pushing or throwing it downward is not allowed. Beyond that, the ball may be struck in any manner. This makes the drop serve especially beginner-friendly, because it is hard to break the rules.

I have already devoted this article to the drop serve.

Rules aside: how should you serve?

There are two basic approaches:

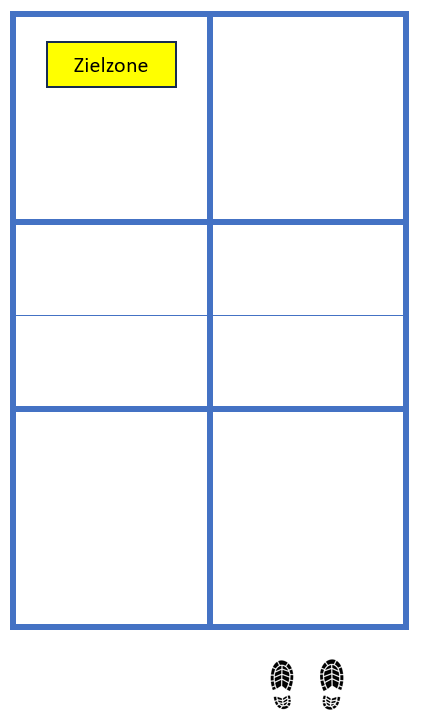

One camp says that because you rarely score a direct point with the serve anyway, you should simply play it as safely as possible into the back third of the court:

Into the back third, because otherwise the returners can too easily play a strong return and keep the serving team back. With the yellow target zone, the serve is unlikely to go out.

The other camp (to which I belong) says: you can afford to take some risk with the serve. After all, the returning team cannot score a point. It is fine if 2 out of 10 serves go out and the remaining 8 make it hard for the returners to keep us back. The occasional direct point should be in there too. That is why my target zones look like this:

Most serves go toward target zone 1, with the occasional one to zones 2 and 3. The red zones are considerably closer to the lines than the yellow one, which of course increases the risk of hitting out.

In general, the following can be said about the serve in pickleball:

Depth is more important than pace or spin. A ball lobbed in a relaxed high arc into the back third of the service court causes the returner more trouble than a hard topspin serve to the middle. Short serves work well as an occasional surprise weapon; otherwise they only make it easier for the returner to get forward to the NVZ.