Beiträge getaggt mit exadata

How #Exadata benefits your Data Warehouse

A migration of your Data Warehouse to Exadata will deliver most likely the combined benefits of Hybrid Columnar Compression, Smart Scan and Storage Indexes. That means better performance with less storage space consumption. Let’s see an example:

SQL> desc sales_noexa

Name Null? Type

----------------------------------------- -------- ----------------------------

ID NUMBER

PRODUCT CHAR(25)

CHANNEL_ID NUMBER

CUST_ID NUMBER

AMOUNT_SOLD NUMBER

ORDER_DATE DATE

SHIP_DATE DATE

SQL> select count(*) from sales_noexa;

COUNT(*)

----------

80000000

SQL> select sum(bytes)/1024/1024 as mb from user_segments where segment_name='SALES_NOEXA';

MB

----------

5520

This table is partitioned by ORDER_DATE with one partition per year. Without Exadata, performance is as follows:

SQL> select count(*),avg(amount_sold) from sales_noexa where channel_id=2;

COUNT(*) AVG(AMOUNT_SOLD)

---------- ----------------

16000000 5000

Elapsed: 00:00:11.06

SQL> select sum(amount_sold) from sales_noexa where ship_date = to_date('01.01.2011','dd.mm.yyyy');

SUM(AMOUNT_SOLD)

----------------

40000000

Elapsed: 00:00:06.07

SQL> select sum(amount_sold) from sales_noexa where order_date = to_date('01.01.2011','dd.mm.yyyy');

SUM(AMOUNT_SOLD)

----------------

40000000

Elapsed: 00:00:00.15

There is no index, so each statement above was a Full Table Scan. The last was much faster because of Partition Pruning. Now the same on Exadata:

SQL> desc sales_exa

Name Null? Type

----------------------------------------- -------- ----------------------------

ID NUMBER

PRODUCT CHAR(25)

CHANNEL_ID NUMBER

CUST_ID NUMBER

AMOUNT_SOLD NUMBER

ORDER_DATE DATE

SHIP_DATE DATE

SQL> select count(*) from sales_exa;

COUNT(*)

----------

80000000

SQL> select sum(bytes)/1024/1024 as mb from user_segments where segment_name='SALES_EXA';

MB

----------

1574

The table is way smaller although it contains the very same rows. That’s because I used Hybrid Columnar Compression to create this table:

create table sales_exa (id number, product char(25), channel_id number, cust_id number, amount_sold number, order_date date, ship_date date)

partition by range (order_date)

(

partition p1990 values less than (to_date('01.01.1991','dd.mm.yyyy')) compress for archive high,

partition p1991 values less than (to_date('01.01.1992','dd.mm.yyyy')) compress for archive high,

partition p1992 values less than (to_date('01.01.1993','dd.mm.yyyy')) compress for archive high,

partition p1993 values less than (to_date('01.01.1994','dd.mm.yyyy')) compress for archive high,

partition p1994 values less than (to_date('01.01.1995','dd.mm.yyyy')) compress for archive high,

partition p1995 values less than (to_date('01.01.1996','dd.mm.yyyy')) compress for archive high,

partition p1996 values less than (to_date('01.01.1997','dd.mm.yyyy')) compress for archive low,

partition p1997 values less than (to_date('01.01.1998','dd.mm.yyyy')) compress for archive low,

partition p1998 values less than (to_date('01.01.1999','dd.mm.yyyy')) compress for archive low,

partition p1999 values less than (to_date('01.01.2000','dd.mm.yyyy')) compress for archive low,

partition p2000 values less than (to_date('01.01.2001','dd.mm.yyyy')) compress for archive low,

partition p2001 values less than (to_date('01.01.2002','dd.mm.yyyy')) compress for query high,

partition p2002 values less than (to_date('01.01.2003','dd.mm.yyyy')) compress for query high,

partition p2003 values less than (to_date('01.01.2004','dd.mm.yyyy')) compress for query high,

partition p2004 values less than (to_date('01.01.2005','dd.mm.yyyy')) compress for query high,

partition p2005 values less than (to_date('01.01.2006','dd.mm.yyyy')) compress for query high,

partition p2006 values less than (to_date('01.01.2007','dd.mm.yyyy')) compress for query low,

partition p2007 values less than (to_date('01.01.2008','dd.mm.yyyy')) compress for query low,

partition p2008 values less than (to_date('01.01.2009','dd.mm.yyyy')) compress for query low,

partition p2009 values less than (to_date('01.01.2010','dd.mm.yyyy')) compress for query low,

partition p2010 values less than (to_date('01.01.2011','dd.mm.yyyy')) compress for query low,

partition p2011 values less than (to_date('01.01.2012','dd.mm.yyyy')),

partition p2012 values less than (to_date('01.01.2013','dd.mm.yyyy')),

partition p2013 values less than (to_date('01.01.2014','dd.mm.yyyy')),

partition p2014 values less than (to_date('01.01.2015','dd.mm.yyyy')),

partition p2015 values less than (to_date('01.01.2016','dd.mm.yyyy')),

partition p2016 values less than (to_date('01.01.2017','dd.mm.yyyy')),

partition p2017 values less than (to_date('01.01.2018','dd.mm.yyyy'))

);

The older the partitions, the stronger the compression – that saved about 4 gig of storage space in this case. How about the performance?

SQL> set timing on

SQL> select count(*),avg(amount_sold) from sales_exa where channel_id=2;

COUNT(*) AVG(AMOUNT_SOLD)

---------- ----------------

16000000 5000

Elapsed: 00:00:05.83

SQL> select sum(amount_sold) from sales_exa where order_date = to_date('01.01.2011','dd.mm.yyyy');

SUM(AMOUNT_SOLD)

----------------

40000000

Elapsed: 00:00:00.14

The first Full Table Scan is twice as fast as before now because of Smart Scan, while the Partition Pruning shows about the same effect as before. A real whopper is that one:

SQL> select sum(amount_sold) from sales_exa where ship_date = to_date('01.01.2011','dd.mm.yyyy');

SUM(AMOUNT_SOLD)

----------------

40000000

Elapsed: 00:00:00.22

That was more than 6 seconds without Exadata before! Why is that so much faster now, almost as good as the Partition Pruning performance?

SQL> connect adam/adam

Connected.

SQL> select sum(amount_sold) from sales_exa where ship_date = to_date('01.01.2011','dd.mm.yyyy');

SUM(AMOUNT_SOLD)

----------------

40000000

SQL> select name,value/1024/1024 as mb from v$statname

natural join v$mystat where name='cell physical IO bytes saved by storage index';

NAME MB

---------------------------------------------------------------- ----------

cell physical IO bytes saved by storage index 1385.64844

I connected newly to initialize v$mystat. The above shows that not only do we need to do less I/O because of the decreased size of the table due to compression and not only did we have a Smart Scan but additionally a Storage Index could be used to further significantly limit the amount of data the cells had to scan through. That is possible because the partitioning on ORDER_DATE leads to a physical sort on disk not only on the ORDER_DATE column but also on the SHIP_DATE column. SHIP_DATE is usually very close to ORDER_DATE. And this ordered way the data is stored is the foundation of the successful usage of Storage Indexes.

Overall, space consumption went down while performance went up 🙂

The above demonstration has been done on our Accenture Enkitec Exadata lab environment, using an X3-2 quarter rack. A more recent Exadata generation respectively a half or full rack would most likely deliver even better results. The tests with SALES_NOEXA have been done by putting CELL_OFFLOAD_PROCESSING=FALSE. That way, I get the hardware-related performance of an Exadata machine without the secret sauce of the Exadata software on the cells that enables them to do Smart Scan in particular.

Now does the demonstrated apply only to Data Warehouse systems? At least to some degree, the answer is yes: Hybrid Columnar Compression is not suitable for many subsequent updates; you wouldn’t want to use that for a table with Online Transaction Processing (OLTP) access pattern. You may have some rather static tables in an OLTP system that may benefit from it, though. Smart Scan requires Full Table Scan respectively Full Index Scan done as Direct Reads. Again, that is not the typical access pattern of an OLTP system although you may have some situations where that happens also there. Same for Storage Indexes – they can only get involved on behalf of a Smart Scan.

Exadata Patching Introduction

These I consider the most important points about Exadata Patching:

Where is the most recent information?

MOS Note 888828.1 is your first read whenever you think about Exadata Patching

What is to patch with which utility?

Expect quarterly bundle patches for the storage servers and the compute nodes. The other components (Infiniband switches, Cisco Ethernet Switch, PDUs) are less frequently patched and not on the picture therefore.

The storage servers have their software image (which includes Firmware, OS and Exadata Software) exchanged completely with the new one using patchmgr. The compute nodes get OS (and Firmware) updates with dbnodeupdate.sh, a tool that accesses an Exadata yum repository. Bundle patches for the Grid Infrastructure and for the Database Software are being applied with opatch.

Rolling or non-rolling?

This the sensitive part! Technically, you can always apply the patches for the storage servers and the patches for compute node OS and Grid Infrastructure rolling, taking down only one server at a time. The RAC databases running on the Database Machine will be available during the patching. Should you do that?

Let’s focus on the storage servers first: Rolling patches are recommended only if you have ASM diskgroups with high redundancy or if you have a standby site to failover to in case. In other words: If you have a quarter rack without a standby site, don’t use rolling patches! That is because the DBFS_DG diskgroup that contains the voting disks cannot have high redundancy in a quarter rack with just three storage servers.

Okay, so you have a half rack or bigger. Expect one storage server patch to take about two hours. That summarizes to 14 hours (for seven storage servers) patching time with the rolling method. Make sure that management is aware about that before they decide about the strategy.

Now to the compute nodes: If the patch is RAC rolling applicable, you can do that regardless of the ASM diskgroup redundancy. If a compute node gets damaged during the rolling upgrade, no data loss will happen. On a quarter rack without a standby site, you put availability at risk because only two compute nodes are there and one could fail while the other is just down.

Why you will want to have a Data Guard Standby Site

Apart from the obvious reason for Data Guard – Disaster Recovery – there are several benefits associated to the patching strategy:

You can afford to do rolling patches with ASM diskgroups using normal redundancy and with RAC clusters that have only two nodes.

You can apply the patches on the standby site first and test it there – using the snapshot standby database functionality (and using Database Replay if you licensed Real Application Testing)

A patch set can be applied on the standby first and the downtime for end users can be reduced to the time it takes to do a switchover

A release upgrade can be done with a (Transient) Logical Standby, reducing again the downtime to the time it takes to do a switchover

I suppose this will be my last posting in 2014, so Happy Holidays and a Happy New Year to all of you 🙂

Why Write-Through is still the default Flash Cache Mode on #Exadata X-4

The Flash Cache Mode still defaults to Write-Through on Exadata X-4 because most customers are better suited that way – not because Write-Back is buggy or unreliable. Chances are that Write-Back is not required, so we just save Flash capacity that way. So when you see this

CellCLI> list cell attributes flashcachemode

WriteThrough

it is likely to your best 🙂

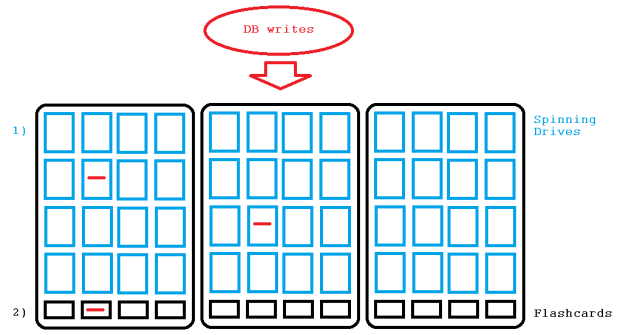

Let me explain: Write-Through means that writing I/O coming from the database layer will first go to the spinning drives where it is mirrored according to the redundancy of the diskgroup where the file is placed that is written to. Afterwards, the cells may populate the Flash Cache if they think it will benefit subsequent reads, but there is no mirroring required. In case of hardware failure, the mirroring is already sufficiently done on the spinning drives, as the pictures shows:

That changes with the Flash Cache Mode being Write-Back: Now writes go primarily to the Flashcards and popular objects may even never get aged out onto the spinning drives. At least that age out may happen significantly later, so the writes on flash must be mirrored now. The redundancy of the diskgroup where the object in question was placed on determines again the number of mirrored writes. The two pictures assume normal redundancy. In other words: Write-Back reduces the usable capacity of the Flashcache at least by half.

Only databases with performance issues on behalf of writing I/O will benefit from Write-Back, the most likely symptom of which would be high numbers of the Free Buffer Waits wait-event. And Flash Logging is done with both Write-Through and Write-Back. So there is a good reason behind turning on the Write-Back Flash Cache Mode only on demand. I have explained this just very similar during my present Oracle University Exadata class in Frankfurt, by the way 🙂