The Flash Cache Mode still defaults to Write-Through on Exadata X-4 because that suits most customers better, not because Write-Back is buggy or unreliable. Chances are that you don't need Write-Back, and staying with Write-Through saves Flash capacity. So when you see this

```
CellCLI> list cell attributes flashcachemode
         WriteThrough
```

it is most likely in your best interest 🙂
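If you collect this attribute from several cells at once, it is worth confirming that they all report the same mode. Below is a minimal sketch. It assumes each output line has the form `cellname: Mode`, which is how a tool such as dcli typically prefixes per-cell output. The sample cell names are hypothetical, and so are the helper names `parse_modes` and `check_uniform`.

```python
from collections import Counter


def parse_modes(output: str) -> dict[str, str]:
    """Map cell name -> flash cache mode from 'cell: Mode' lines (assumed format)."""
    modes = {}
    for line in output.splitlines():
        if ":" not in line:
            continue  # skip blank or unexpected lines
        cell, mode = line.split(":", 1)
        modes[cell.strip()] = mode.strip()
    return modes


def check_uniform(modes: dict[str, str]) -> str:
    """Return the single mode shared by all cells, or raise if the cells differ."""
    counts = Counter(modes.values())
    if len(counts) != 1:
        raise ValueError(f"cells disagree on flash cache mode: {dict(counts)}")
    return next(iter(counts))


# Hypothetical output gathered from three cells
sample = """cel01: WriteThrough
cel02: WriteThrough
cel03: WriteThrough"""

print(check_uniform(parse_modes(sample)))  # prints WriteThrough
```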

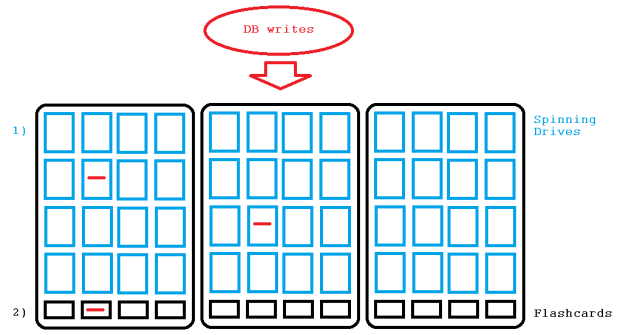

Let me explain: with Write-Through, write I/O coming from the database layer goes first to the spinning drives. There it is mirrored according to the redundancy of the diskgroup that holds the file being written. Afterwards, the cells may populate the Flash Cache if they expect this to benefit subsequent reads, but no mirroring is required there. If hardware fails, the mirrored copies on the spinning drives are sufficient, as the picture above shows.
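The Write-Through write path can be modelled as a toy sketch that tracks only how many copies of a block exist on disk and in flash. It does not model the data itself. The `COPIES` mapping reflects ASM normal (2-way) and high (3-way) mirroring. The class, its method names and the `cache_for_reads` flag are hypothetical stand-ins for the cell's own caching decision.

```python
from dataclasses import dataclass, field

# Number of ASM mirror copies per diskgroup redundancy level
COPIES = {"NORMAL": 2, "HIGH": 3}


@dataclass
class WriteThroughCells:
    disk: dict = field(default_factory=dict)   # block -> copies on spinning drives
    flash: dict = field(default_factory=dict)  # block -> copies in the Flash Cache

    def write(self, block: str, redundancy: str, cache_for_reads: bool) -> None:
        # 1. The write is mirrored on the spinning drives per diskgroup redundancy
        self.disk[block] = COPIES[redundancy]
        # 2. Optionally keep the block in flash for later reads: one copy only,
        #    because the disk mirrors already protect against hardware failure
        if cache_for_reads:
            self.flash[block] = 1


cells = WriteThroughCells()
cells.write("b1", "NORMAL", cache_for_reads=True)
print(cells.disk["b1"], cells.flash["b1"])  # prints 2 1
```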

That changes when the Flash Cache Mode is Write-Back: now writes go primarily to the flash cards, and popular objects may never get aged out onto the spinning drives at all. At the very least, that aging out may happen significantly later, so the writes on flash must now be mirrored. Again, the redundancy of the diskgroup holding the object determines the number of mirrored writes. The two pictures assume normal redundancy. In other words: Write-Back reduces the usable capacity of the Flash Cache by at least half (by two thirds with high redundancy).
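To put the capacity reduction into numbers, here is a back-of-the-envelope sketch. It assumes that data already written to disk needs a single flash copy, and that data still held only in flash needs one copy per ASM mirror. The raw size of 1000 GB and the `dirty_fraction` parameter are hypothetical inputs, not Exadata figures.

```python
COPIES = {"NORMAL": 2, "HIGH": 3}


def usable_flash_gb(raw_gb: float, redundancy: str, dirty_fraction: float) -> float:
    """Usable cache capacity when `dirty_fraction` of cached data is not yet on disk.

    Clean data needs one flash copy; dirty data needs one copy per ASM mirror.
    """
    if not 0.0 <= dirty_fraction <= 1.0:
        raise ValueError("dirty_fraction must be between 0 and 1")
    copies = COPIES[redundancy]
    # Raw flash consumed per GB of cached data
    per_gb = (1 - dirty_fraction) + dirty_fraction * copies
    return raw_gb / per_gb


print(usable_flash_gb(1000, "NORMAL", 1.0))  # 500.0
print(usable_flash_gb(1000, "NORMAL", 0.0))  # 1000.0
```

With everything dirty, normal redundancy halves the usable capacity and high redundancy leaves a third. Any data that has been aged out to disk pulls the figure back toward the raw size.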

Only databases with performance problems caused by write I/O will benefit from Write-Back. The most likely symptom would be high numbers of the Free Buffer Waits wait event. Also, Smart Flash Logging works with both Write-Through and Write-Back. So there is good reason to turn on the Write-Back Flash Cache Mode only on demand. By the way, I explained this in much the same way during my current Oracle University Exadata class in Frankfurt 🙂

#1 by kevinclosson on August 6, 2014 - 20:51

Hi Uwe,

This is a very good post. I hope you’ll allow a comment.

I find that there remains confusion for some Exadata users/prospects in the case when Write-back *is* enabled. Perhaps you can clarify. As you point out, the redundancy of the disk group dictates how many copies of a write-back block are stored in flash. In the case of HIGH redundancy, this means a write of, say, 8KB will result in 3 copies of the 8KB block being cached in the Exadata Write-back cache (in the flash cards of the cells that have the HDD backing store for the blocks). However, what is not clear to some folks is that upon aging the writes out (the write-back or, in common storage parlance, the de-staging) only the primary mirror copy remains in cache. So the "triple footprint" lasts only until the de-staging to HDD. Am I correct in that understanding?

#2 by Uwe Hesse on August 6, 2014 - 20:58

Hi Kevin, yes, that is true: after aging out from the flash cards, there is no longer any need to retain the mirrored copies there. Otherwise we wouldn't make room in the Flash Cache for other, more useful objects.
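The lifecycle Kevin and Uwe agree on can be modelled as a small state sketch. A write-back block is mirrored in flash while it is dirty. Once it is de-staged, it is mirrored on disk and only the primary copy stays in flash. The class and method names are hypothetical, and the sketch counts copies only.

```python
from dataclasses import dataclass

COPIES = {"NORMAL": 2, "HIGH": 3}


@dataclass
class WriteBackBlock:
    redundancy: str
    flash_copies: int = 0
    disk_copies: int = 0
    dirty: bool = False

    def write(self) -> None:
        # New data lives only in flash, so it must be mirrored there
        self.flash_copies = COPIES[self.redundancy]
        self.dirty = True

    def destage(self) -> None:
        # Write the block to the spinning drives, mirrored there ...
        self.disk_copies = COPIES[self.redundancy]
        # ... after which only the primary copy needs to stay in flash
        self.flash_copies = 1
        self.dirty = False


block = WriteBackBlock("HIGH")
block.write()
print(block.flash_copies)  # 3: the "triple footprint"
block.destage()
print(block.flash_copies, block.disk_copies)  # 1 3
```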

#3 by kevinclosson on August 6, 2014 - 21:06

Thanks Uwe... so does a glut of read activity force de-staging, or is it a fixed percentage threshold or some such?

#4 by Uwe Hesse on August 6, 2014 - 21:15

An LRU algorithm determines both: whether the newly read data should be cached in flash and, if so, what needs to be aged out to make room. Therefore, popular objects may never hit the spinning drives again, while less popular objects get aged out.
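A plain LRU cache is enough to illustrate why popular blocks may never reach the spinning drives. The sketch below is not the cell software's actual algorithm, which is surely more elaborate. Capacity is counted in blocks, and `should_cache` is a hypothetical admission policy standing in for the "whether" part. For simplicity, a dirty block is de-staged when it is evicted, so the sketch collapses de-staging and eviction and ignores mirror copies.

```python
from collections import OrderedDict


class LruFlashCache:
    """Toy LRU cache: capacity in blocks, dirty blocks de-staged on eviction."""

    def __init__(self, capacity, should_cache=lambda block: True):
        self.capacity = capacity
        self.should_cache = should_cache  # hypothetical admission policy
        self.entries = OrderedDict()      # block -> dirty flag, least recent first
        self.destaged = []                # blocks written to disk on eviction

    def _admit(self, block, dirty):
        if block in self.entries:
            self.entries.move_to_end(block)  # mark as most recently used
            self.entries[block] = self.entries[block] or dirty
            return
        if len(self.entries) >= self.capacity:
            victim, victim_dirty = self.entries.popitem(last=False)
            if victim_dirty:
                self.destaged.append(victim)  # age out to the spinning drives
        self.entries[block] = dirty

    def read(self, block):
        if block in self.entries or self.should_cache(block):
            self._admit(block, dirty=False)

    def write(self, block):
        self._admit(block, dirty=True)  # write-back: writes always land in flash


cache = LruFlashCache(capacity=2)
for op, blk in [("w", "A"), ("r", "B"), ("r", "A"), ("r", "C"), ("w", "D")]:
    cache.write(blk) if op == "w" else cache.read(blk)
print(list(cache.entries), cache.destaged)  # ['C', 'D'] ['A']
```

Had block A kept being accessed, it would have stayed at the hot end of the list and never been de-staged.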

#5 by Emre Baransel on August 7, 2014 - 09:23

Hi Uwe,

Very clear, as always. For a new installation, can you recommend any database statistics we could use to decide between Exadata WriteThrough and WriteBack?

#6 by Uwe Hesse on August 7, 2014 - 13:35

Hi Emre, if the databases you plan to move onto the new Exadata platform have Free Buffer Waits among their top 5 wait events, and significant DB Time is currently spent on it during production hours, you should probably turn on Write-Back Flash Cache Mode.
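Uwe's rule of thumb can be written as a small heuristic. The sketch assumes you have already extracted the top timed events and the total DB Time for production hours, for example from an AWR report. The function name and input shape are hypothetical. So is the 10% cut-off used for "significant": it is an arbitrary placeholder, not an Oracle recommendation.

```python
def suggest_write_back(top_events: list[tuple[str, float]],
                       db_time_s: float,
                       share_threshold: float = 0.10) -> bool:
    """True if free buffer waits is a top-5 event with a significant share of DB Time."""
    if db_time_s <= 0:
        raise ValueError("db_time_s must be positive")
    top5 = sorted(top_events, key=lambda e: e[1], reverse=True)[:5]
    for name, wait_s in top5:
        if name.lower() == "free buffer waits":
            return wait_s / db_time_s >= share_threshold
    return False


# Hypothetical production-hours figures (seconds)
events = [("db file sequential read", 1200.0), ("free buffer waits", 900.0),
          ("DB CPU", 800.0), ("log file sync", 300.0)]
print(suggest_write_back(events, db_time_s=4000.0))  # True (900 / 4000 = 22.5%)
```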

#7 by ugurcan on August 7, 2014 - 16:32

Hi Uwe,

You said that if my cache mode is WriteBack, the writes on flash must be mirrored (that's OK), and for this reason Write-Back reduces the usable capacity of the Flash Cache by at least half.

I'm just wondering: is this mirroring done on the flash cards or on the spinning disks?

If the mirroring is on the spinning disks, we'd likely have more usable Flash Cache, right?

Thanks

ugurcan

#8 by Uwe Hesse on August 7, 2014 - 21:04

Hi ugurcan, with Write-Back, the mirroring is done on the flash cards because that is where the write I/O (of popular objects) goes. If these objects age out onto the spinning drives, the mirroring is done there.

#9 by Robin Chatterjee (@robinchatterjee) on August 11, 2014 - 18:14

Hi Uwe,

I think a major point you are missing is that when you restart the storage cells in write-through mode, you effectively wipe the slate clean. Assuming you reboot them every quarter for storage server patching, 3 months of acquired knowledge about the best things to keep in cache is lost immediately. That, I feel, is the biggest downside of write-through. With write-back, all the information acquired by the database is retained indefinitely. This will definitely help performance.

#10 by John on July 21, 2015 - 14:41

Thanks for sharing, Uwe. A couple of questions, if you please:

1) Does this mean that with ASM HIGH redundancy we will have 3 copies in the Flash Cache?

2) Say we have a quarter rack with 3 storage cells and ASM HIGH redundancy. Will an "active write-back" block have a copy on each storage cell, even though the cells' flash caches do not "communicate" with each other?

3) Is there any way we can query the status or test this?

Thanks

#11 by Robin Chatterjee (@robinchatterjee) on July 1, 2016 - 04:08

Hi Uwe, with the X5 and higher it is even more imperative that write-back become the default, because flash delivers far more IOPS than HDD. This leads to write bottlenecks with write-through, since flash write IOPS are in the millions while disk write IOPS are in the thousands. Apart from everything I said in my comment above, flash sizes are now pretty huge, so the multiple-copy penalty is becoming less and less important compared with the HDD IOPS bottleneck. I recently analysed a customer who was maxing out their cell physical disk write IOPS while flash write IOPS were below 50% of their potential.

With flash write IOPS of a full rack on the order of 2 million and disk IOPS on the order of 28 thousand, the discrepancy is far too great.
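The gap Robin describes is easy to quantify from the figures in his comment. In the P.S. of the next comment, he also derives his 2 million figure by halving a 4 million datasheet number to account for normal redundancy. The sketch below reproduces both calculations. The helper `effective_write_iops` is hypothetical and assumes each mirrored write costs one flash write per copy.

```python
def effective_write_iops(raw_iops: float, copies: int) -> float:
    """Write IOPS left for the database when every write is stored `copies` times.

    Assumes each mirrored write costs one device write per copy.
    """
    return raw_iops / copies


flash_write_iops = 2_000_000  # full-rack flash write IOPS quoted above
disk_write_iops = 28_000      # full-rack disk IOPS quoted above

print(f"flash/disk ratio: {flash_write_iops / disk_write_iops:.0f}x")  # prints flash/disk ratio: 71x
print(effective_write_iops(4_000_000, 2))  # 2000000.0
```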

#12 by Robin Chatterjee (@robinchatterjee) on July 1, 2016 - 04:10

Hi John, you can dump the object IDs of objects in the flash cache. You would have to take a snapshot across all cells and then correlate.

P.S. Uwe, the datasheet actually mentions 4 million flash write IOPS, not 2 million, but I am going with the conservative expectation for normal redundancy 🙂
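The "snapshot across all cells, then correlate" step Robin suggests can be sketched once the per-cell listings are exported as text. The sketch assumes one `objectNumber cachedSize` pair per line. Such a listing might come from CellCLI's `LIST FLASHCACHECONTENT`, but check the attribute names against your cell version. The cell names and numbers below are made up for illustration. Note that an object appearing on every cell does not by itself prove mirroring, because ASM also spreads an object's extents across cells.

```python
from collections import defaultdict


def parse_cell_snapshot(text: str) -> dict[int, int]:
    """Parse 'objectNumber cachedSize' lines into {object_number: bytes}."""
    sizes: dict[int, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 2 or not all(p.isdigit() for p in parts):
            continue  # skip headers and unexpected lines
        obj, size = int(parts[0]), int(parts[1])
        sizes[obj] = sizes.get(obj, 0) + size
    return sizes


def correlate(snapshots: dict[str, str]) -> dict[int, tuple[int, list[str]]]:
    """Combine per-cell snapshots: object -> (total cached bytes, cells holding it)."""
    totals: dict[int, int] = defaultdict(int)
    cells: dict[int, set] = defaultdict(set)
    for cell, text in snapshots.items():
        for obj, size in parse_cell_snapshot(text).items():
            totals[obj] += size
            cells[obj].add(cell)
    return {obj: (totals[obj], sorted(cells[obj])) for obj in totals}


# Hypothetical snapshots taken at roughly the same time on three cells
snaps = {"cel01": "51234 8192\n60001 4096", "cel02": "51234 8192", "cel03": "51234 8192"}
for obj, (size, where) in sorted(correlate(snaps).items()):
    print(obj, size, where)
```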